How to batch download Azure Blobs or AWS S3 Files

Posted on February 20, 2023

If you are working in the cloud, you have probably come across the requirement to batch download files from cloud storage at some point. Whether you want to provide last year’s reports, files that were uploaded by users, or something else, providing an archive of data is a fairly common use case. However, when I researched whether this is possible with Microsoft Azure, I only found examples (such as the one on MSDN) that download the files either one by one or in parallel, but not as a batch.

The article first explains my journey to the current solution. For the TL;DR, skip to the client-based solution further down.

The “easy” server-side solution

So when I came across the requirement, I implemented this “best practice” approach and failed miserably. The flow was similar to the following:

Client requests archive via RESTful-API

- ask the API for a (time-limited, signed) link

- download the files to a temporary directory

- zip the whole content

- send the zip-file to the client

For a few small files, this approach even works fine. However, as soon as the amount of data grows, you run into some severe problems:

- the HTTP server, load balancer, firewall, … or even the client might time out

- the server is busy fulfilling the request and cannot handle the other requests properly anymore

- the temporary space might get filled up, so that no further download is possible

- the long transfer is interpreted as “stuck” by the infrastructure, so that the pod is killed

…and probably some others that I have forgotten by now. It is not a very viable solution, and (speaking from experience by now) it has a lot of potential to fail.

The “more-complex” server-side solution

As a real-time delivery of the data is not possible, my next solution was to offload the task to a background job, which was also the inspiration for my earlier article about KEDA. The flow now differed a bit for the client, but the work could happen completely in the background:

Client requests archive via RESTful-API

Server answers that the request is processed in the background

- a new entry in redis is created

- this triggers an “archive-creation”-job

- KEDA will spawn a Docker container to process the job

The job then basically does the same as the server before, but does not send the result to the client. Instead, it stores the archive in Azure Blob Storage again and afterwards generates a link that the client receives.

The background job now creates a few other issues:

- the client no longer has any information about the progress (this needs a mail on completion, progress updates via WebSocket, or something else)

- a lot of Docker containers are spawned over time, so that the cluster can run out of resources

- we are now storing the files three times (origin, runner, result zip)

- the user might become impatient and start another job (needs to be prevented)

- there might be problems during the transfer that require a restart of the job or create corrupt archives

- the temporary space can become full again
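The duplicate-job issue from the list above could be handled by deriving a key from the request and reusing any unfinished job with the same key. The following is only a minimal sketch: `ArchiveJobRegistry`, `request` and `setStatus` are hypothetical names, and the in-memory `Map` merely stands in for the Redis entries described earlier.

```typescript
// Hypothetical sketch: the Map stands in for the job entries stored in Redis.
type JobStatus = "queued" | "running" | "done" | "failed";

interface ArchiveJob {
  id: string;
  key: string;
  status: JobStatus;
}

class ArchiveJobRegistry {
  private jobsByKey = new Map<string, ArchiveJob>();
  private nextId = 1;

  // Returns the unfinished job for the same user and file set, or creates a new one.
  request(userId: string, fileIds: string[]): { job: ArchiveJob; created: boolean } {
    // Sorting a copy makes the key independent of the order of the file ids.
    const key = `${userId}:${[...fileIds].sort().join(",")}`;
    const existing = this.jobsByKey.get(key);
    if (existing && (existing.status === "queued" || existing.status === "running")) {
      return { job: existing, created: false };
    }
    const job: ArchiveJob = { id: `job-${this.nextId++}`, key, status: "queued" };
    this.jobsByKey.set(key, job);
    return { job, created: true };
  }

  // Called by the worker when the job changes state.
  setStatus(key: string, status: JobStatus): void {
    const job = this.jobsByKey.get(key);
    if (job) {
      job.status = status;
    }
  }
}
```

With something like this, an impatient second click returns the already queued job instead of spawning another container. It does not solve the other issues in the list.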

So even now that we have removed the workload from our core services, we are still struggling with plenty of problems that we don’t want to have, and on top of that the process has become more complex. If only we could send the files directly from Azure or AWS to the user. But that’s not possible… or is it?

A client-based solution for downloading the zip archive

Let’s go back to the original requirement: We want to

- provide multiple files from a BLOB Storage

- that are downloaded by the client

- optionally with a limit on parallel downloads

- optionally with throttling (e.g. waiting a few seconds after every batch)

- and stored in a zip file (potentially with folders etc.)

- and also share progress information

- without having to do the “hard work” by ourselves

Is that even possible, without an Azure or AWS batch download? Yes, it is!

I created a proof of concept in this repository. The demo is written with Vue, but you really don’t need to understand Vue to get what’s going on there:

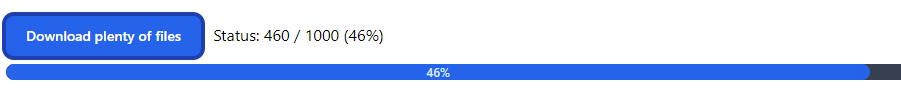

Whenever you click the Client Batch Download Example button, it triggers the downloadAndStoreAsZip function, which tries to download 1000 files from a robot-image generator called robohash.org (which is pretty cool, btw). By default, the downloadInParallel function creates fetch promises for 10 images at a time and downloads them as blobs into RAM. If you want, it can also “throttle” the next batch by waiting a few seconds; otherwise it continues until everything is downloaded. The progress is updated after every downloaded batch, so that we can visualize it for the client. Finally, we use JSZip to zip everything and provide the download to the client.
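To make the batching idea more concrete, here is a minimal sketch of how such a download loop could look. It is not the code from the repository: `downloadInBatches`, `BatchOptions` and the injected `fetchOne` callback are hypothetical names chosen for illustration, and the batch size of 10 only mirrors the default mentioned above.

```typescript
// Hypothetical sketch of batched downloads with progress and optional throttling.
interface BatchOptions {
  batchSize?: number;   // number of parallel downloads per batch
  throttleMs?: number;  // optional pause between two batches
  onProgress?: (done: number, total: number) => void;
}

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

async function downloadInBatches<T>(
  urls: string[],
  fetchOne: (url: string) => Promise<T>, // e.g. a wrapper around fetch(...).blob()
  options: BatchOptions = {}
): Promise<T[]> {
  const { batchSize = 10, throttleMs = 0, onProgress } = options;
  const results: T[] = [];

  for (let start = 0; start < urls.length; start += batchSize) {
    const batch = urls.slice(start, start + batchSize);

    // All downloads of one batch run in parallel; the next batch starts
    // only after every download of the current batch has finished.
    const downloaded = await Promise.all(batch.map((url) => fetchOne(url)));
    results.push(...downloaded);

    // Report progress once per batch so the UI can show it.
    onProgress?.(results.length, urls.length);

    const isLastBatch = start + batchSize >= urls.length;
    if (throttleMs > 0 && !isLastBatch) {
      await sleep(throttleMs);
    }
  }
  return results;
}
```

In a browser, `fetchOne` could be something like `(url) => fetch(url).then((r) => r.blob())`. The resulting blobs could then be added to a JSZip instance with `zip.file(name, blob)` and turned into a downloadable archive via `zip.generateAsync({ type: "blob" })`. Note that `Promise.all` rejects as soon as one download fails, so a real implementation would probably want some error handling per file.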

That’s much better than any of the solutions we had before:

- the client sees the progress

- the data transfer is only done once and directly to the client

- we are utilizing the cloud infrastructure of Microsoft Azure Blobs or Amazon S3 without harming ourselves

- we can limit parallel downloads and wait between batches

- if a client needs to restart / loses connection, the traffic will be on their side

However, there are also two downsides:

- all files are held in RAM, so the memory of the client computer sets a limit

- the data is transferred in the original size, and not compressed for the client

For the first issue, there’s a possible solution that is linked directly by the FileSaver library we use: StreamSaver makes it possible to write the blobs directly to the local hard disk while the other files are still downloading.

For the second downside, I have to admit that I’m willing to accept it, because the benefits outweigh the downsides by far.